Having A Provocative Deepseek Works Only Under These Conditions

페이지 정보

본문

Unlike many proprietary models, Deepseek is open-source. Analyzing campaign performance, producing customer segmentation fashions, and automating content creation. This folder additionally incorporates highly effective textual content generation and coding models, obtainable for free deepseek. Deep Seek Coder was educated utilizing in depth datasets, including real textual content and code from repositories like GitHub, fragments from software program forums and web sites, and additional sources akin to code assessments. On condition that the perform under test has private visibility, it cannot be imported and can only be accessed using the same package. You can insert your code into the Javascript node, or ask the JS AI assistant to write, explain, modify, and debug it. Each token represents a phrase, command, or symbol in code or pure language. Of all the datasets used for coaching, 13% consisted of natural language and 87% of code, encompassing eighty different programming languages. With this comprehensive coaching, DeepSeek Coder has learned to utilize billions of tokens discovered on-line.

Unlike many proprietary models, Deepseek is open-source. Analyzing campaign performance, producing customer segmentation fashions, and automating content creation. This folder additionally incorporates highly effective textual content generation and coding models, obtainable for free deepseek. Deep Seek Coder was educated utilizing in depth datasets, including real textual content and code from repositories like GitHub, fragments from software program forums and web sites, and additional sources akin to code assessments. On condition that the perform under test has private visibility, it cannot be imported and can only be accessed using the same package. You can insert your code into the Javascript node, or ask the JS AI assistant to write, explain, modify, and debug it. Each token represents a phrase, command, or symbol in code or pure language. Of all the datasets used for coaching, 13% consisted of natural language and 87% of code, encompassing eighty different programming languages. With this comprehensive coaching, DeepSeek Coder has learned to utilize billions of tokens discovered on-line.

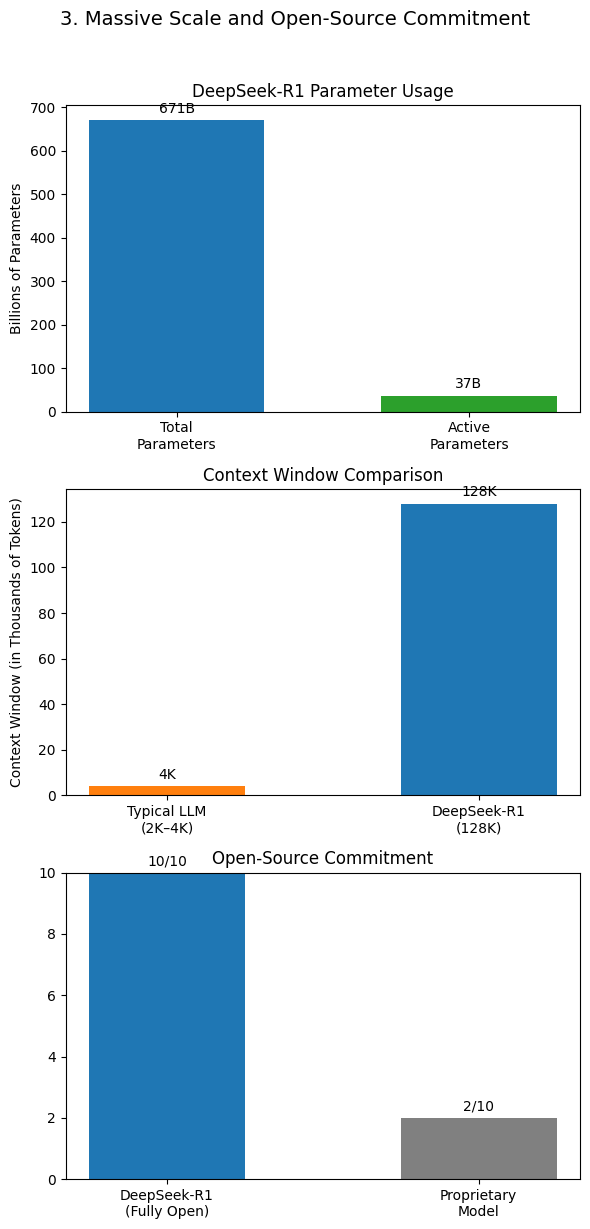

You'll see two fields: User Prompt and Max Tokens. Leveraging the self-consideration mechanism from the Transformer structure, the model can weigh the significance of various tokens in an enter sequence, capturing advanced dependencies throughout the code. These elements improve the mannequin's capability to generate, optimize, and perceive complicated code. This mannequin incorporates varied elements of the Transformer and Mixture-to-Expert architectures, including attention mechanisms and data deduplication strategies to optimize efficiency and effectivity. OpenAI and its partners simply announced a $500 billion Project Stargate initiative that may drastically speed up the development of green energy utilities and AI knowledge centers throughout the US. Nvidia alone skilled a staggering decline of over $600 billion. The biggest version, DeepSeek Coder V2, has 236 billion parameters, which are the numeric models all fashions use to perform. And we hear that a few of us are paid greater than others, in response to the "diversity" of our dreams. Much like the others, this does not require a bank card. From builders leveraging the Deepseek R1 Lite for fast coding help to writers utilizing AI-pushed content creation tools, this app delivers unparalleled worth. Users have reported that the response sizes from Opus inside Cursor are limited in comparison with using the mannequin instantly by way of the Anthropic API.

Created instead to Make and Zapier, this service permits you to create workflows utilizing motion blocks, triggers, and no-code integrations with third-celebration apps and AI fashions like deep seek (https://bikeindex.org/users/deepseek1) Coder. Direct integrations embody apps like Google Sheets, Airtable, GMail, Notion, and dozens extra. As OpenAI and Google continue to push the boundaries of what's attainable, the way forward for AI seems brighter and more clever than ever earlier than. Latenode provides varied set off nodes, together with schedule nodes, webhooks, and actions in third-celebration apps, like including a row in a Google Spreadsheet. To seek out the block for this workflow, go to Triggers ➨ Core Utilities and choose Trigger on Run Once. Upcoming variations of DevQualityEval will introduce extra official runtimes (e.g. Kubernetes) to make it easier to run evaluations on your own infrastructure. The Code Interpreter SDK lets you run AI-generated code in a secure small VM - E2B sandbox - for AI code execution. Layer normalization ensures the coaching course of remains stable by retaining the parameter values inside a reasonable range, preventing them from becoming too massive or too small. This process removes redundant snippets, focusing on essentially the most relevant ones and maintaining the structural integrity of your codebase.

Due to this, you can write snippets, distinguish between working and damaged commands, understand their functionality, debug them, and more. Simply put, the more parameters there are, the extra information the model can course of, main to better and more detailed solutions. There will be benchmark knowledge leakage/overfitting to benchmarks plus we do not know if our benchmarks are accurate sufficient for the SOTA LLMs. Latest iterations are Claude 3.5 Sonnet and Gemini 2.0 Flash/Flash Thinking. Benchmarks constantly present that DeepSeek-V3 outperforms GPT-4o, Claude 3.5, and Llama 3.1 in multi-step drawback-solving and contextual understanding. This enables for extra accuracy and recall in areas that require a longer context window, together with being an improved model of the previous Hermes and Llama line of models. Whether you're dealing with massive datasets or running complex workflows, Deepseek's pricing construction permits you to scale effectively with out breaking the financial institution. This approach permits Deep Seek Coder to handle advanced datasets and duties with out overhead.

- 이전글How to Become Better With Try Gpt Chat In 10 Minutes 25.02.12

- 다음글Рейтинг лучших казино: Встречайте новые игровые механики 25.02.12

댓글목록

등록된 댓글이 없습니다.