Warning Signs on DeepSeek You Should Know

So what did DeepSeek announce? The model, DeepSeek V3, was developed by the AI firm DeepSeek (https://postgresconf.org/users/deepseek-1) and was released on Wednesday under a permissive license that allows developers to download and modify it for most applications, including commercial ones. Our MTP (multi-token prediction) strategy primarily aims to improve the performance of the main model, so during inference, we can directly discard the MTP modules and the main model can function independently and normally. Problem-Solving and Decision Support: The model aids in complex problem-solving by providing data-driven insights and actionable recommendations, making it an indispensable companion for business, science, and everyday decision-making. The PHLX Semiconductor Index (SOX) dropped more than 9%. Networking solutions and hardware partner stocks dropped along with them, including Dell (DELL), Hewlett Packard Enterprise (HPE) and Arista Networks (ANET). The rapid ascension of DeepSeek has investors worried it could threaten assumptions about how much competitive AI models cost to develop, as well as the kind of infrastructure needed to support them, with wide-reaching implications for the AI marketplace and Big Tech stocks. I take responsibility. I stand by the post, including the two biggest takeaways that I highlighted (emergent chain-of-thought via pure reinforcement learning, and the power of distillation), and I mentioned the low cost (which I expanded on in Sharp Tech) and chip ban implications, but those observations were too localized to the current state of the art in AI.

DeepSeek, a Chinese startup established by hedge fund manager Liang Wenfeng, was founded in 2023 in Hangzhou, China, the tech hub that is home to Alibaba (BABA) and many of China's other high-flying tech giants. Shares of AI chipmaker Nvidia (NVDA) and a slew of other AI-related stocks sold off Monday as an app from Chinese AI startup DeepSeek boomed in popularity. Citi analysts, who said they expect AI companies to continue buying its advanced chips, maintained a "buy" rating on Nvidia. Wedbush called Monday a "golden buying opportunity" to own shares in ChatGPT backer Microsoft (MSFT), Alphabet, Palantir (PLTR), and other heavyweights of the American AI ecosystem that had come under pressure. The U.S. has restricted China's access to its most sophisticated chips, and American AI leaders like OpenAI, Anthropic, and Meta Platforms (META) are spending billions of dollars on development. Shares of American AI chipmakers including Nvidia, Broadcom (AVGO) and AMD (AMD) sold off, along with those of international partners like TSMC (TSM). Intel had also made 10nm (TSMC 7nm equivalent) chips years earlier using nothing but DUV, but couldn't do so with profitable yields; the idea that SMIC could ship 7nm chips using their existing equipment, particularly if they didn't care about yields, wasn't remotely surprising - to me, anyways.

The existence of this chip wasn't a surprise for those paying close attention: SMIC had made a 7nm chip a year earlier (the existence of which I had noted even earlier than that), and TSMC had shipped 7nm chips in volume using nothing but DUV lithography (later iterations of 7nm were the first to use EUV). Click Load, and the model will load and be ready for use. Then you will need to run the model locally. DeepSeek is also gaining popularity among developers, especially those interested in privacy and in AI models they can run on their own machines. Simply put, the more parameters there are, the more information the model can process, leading to better and more detailed answers. Moreover, many of the breakthroughs that undergirded V3 were actually revealed with the release of the V2 model last January. 100M, and R1's open-source release has democratized access to state-of-the-art AI. In other words, you take a bunch of robots (here, some relatively simple Google bots with a manipulator arm and eyes and mobility) and give them access to a large model. Is this model naming convention the greatest crime that OpenAI has committed?

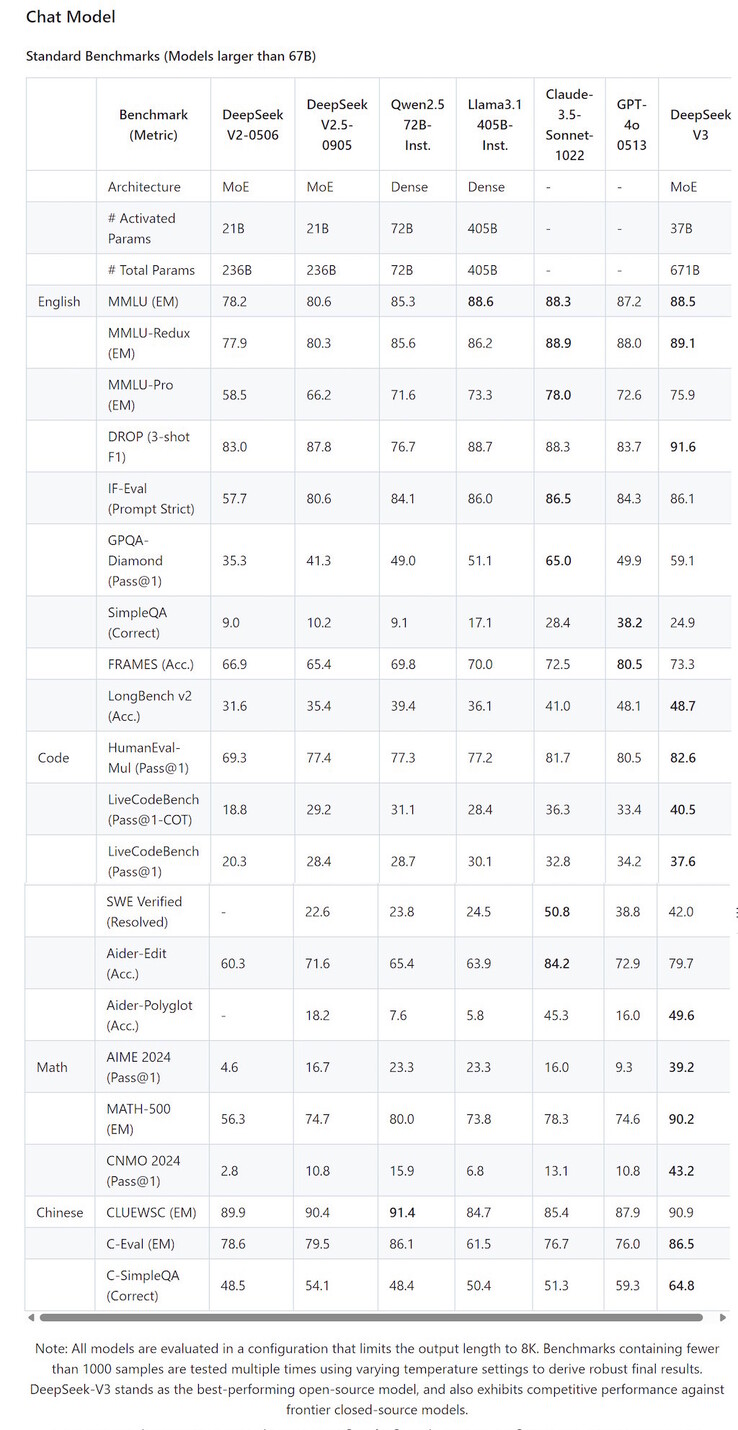

So is OpenAI screwed? One thing that distinguishes DeepSeek from competitors such as OpenAI is that its models are 'open source' - meaning key components are free for anyone to access and modify, though the company hasn't disclosed the data it used for training. MoE (mixture of experts) splits the model into multiple "experts" and only activates the ones that are necessary; GPT-4 was a MoE model that was believed to have 16 experts with approximately 110 billion parameters each. Among the four Chinese LLMs, Qianwen (on both Hugging Face and Model Scope) was the only model that mentioned Taiwan explicitly. While training OpenAI's model cost nearly $100 million, the Chinese startup made it a whopping 16 times cheaper. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead. To set the context straight, GPT-4o and Claude 3.5 Sonnet failed all of the reasoning and math questions, while only Gemini 2.0 1206 and o1 managed to get them right. The most proximate announcement to this weekend's meltdown was R1, a reasoning model that is very similar to OpenAI's o1.
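
To make the "only activates the ones that are necessary" idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy under stated assumptions, not DeepSeek's or OpenAI's actual implementation: the function names (`route_top_k`, `moe_forward`), the four scalar "experts", and the gate scores are all hypothetical and chosen only for illustration.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    routes = route_top_k(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    used = [i for i, _ in routes]
    return output, used

# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
# Hypothetical gate scores; a real model computes these from the input token.
gate_scores = [0.1, 2.0, 0.3, 1.5]

output, used = moe_forward(3.0, experts, gate_scores, k=2)
print(used)  # indices of the experts that actually ran
```

With these scores only two of the four experts run for this input, which is why a MoE model's per-token compute can be far smaller than its total parameter count suggests.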
