DeepSeek: The Chinese AI App That Has the World Talking


While it’s not the most practical model, DeepSeek V3 is an achievement in some respects. It’s worth remembering that you can get surprisingly far with somewhat outdated technology. Here’s a fun paper where researchers with the Luleå University of Technology build a system to help them deploy autonomous drones deep underground for the purpose of equipment inspection. How much agency do you have over a technology when, to use a phrase regularly uttered by Ilya Sutskever, AI technology "wants to work"? These bills have received significant pushback, with critics saying this would represent an unprecedented level of government surveillance on individuals, and would involve citizens being treated as ‘guilty until proven innocent’ rather than ‘innocent until proven guilty’. Why this matters - language models are a broadly disseminated and understood technology: Papers like this show how language models are a class of AI system that is very well understood at this point - there are now numerous groups in countries around the world who have shown themselves able to do end-to-end development of a non-trivial system, from dataset gathering through to architecture design and subsequent human calibration. If you look closer at the results, it’s worth noting these numbers are heavily skewed by the easier environments (BabyAI and Crafter).

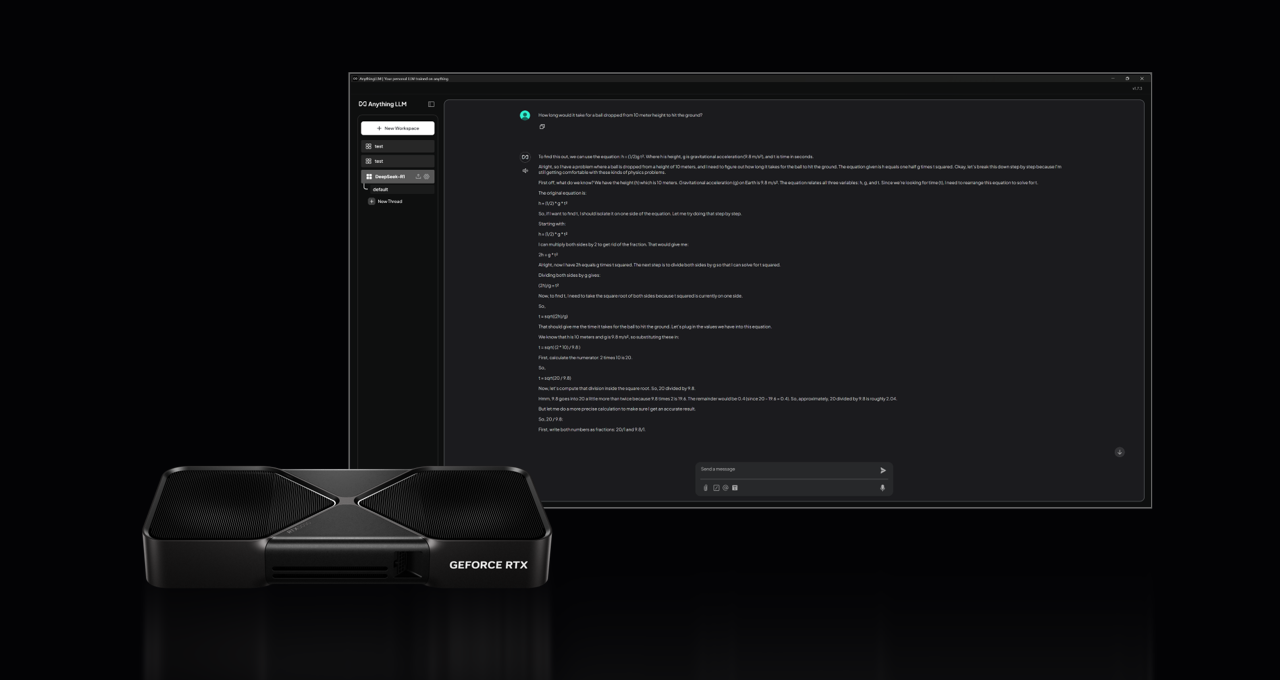

Why this matters: First, it’s good to remind ourselves that you can do a huge amount of valuable stuff without cutting-edge AI. That is, they can use it to improve their own foundation model much faster than anyone else can do it. Models developed for this challenge need to be portable as well - model sizes can’t exceed 50 million parameters. Evaluating large language models trained on code. In this paper, we introduce DeepSeek-V3, a large MoE language model with 671B total parameters and 37B activated parameters, trained on 14.8T tokens. We record the expert load of the 16B auxiliary-loss-based baseline and the auxiliary-loss-free model on the Pile test set. Auxiliary-loss-free load balancing strategy for mixture-of-experts.
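The parameter and token counts quoted above can be turned into two quick ratios. The following is a minimal arithmetic sketch that uses only the figures in the text (671B total parameters, 37B activated parameters, 14.8T training tokens). The function and constant names are hypothetical and chosen for illustration.

```python
def activated_fraction(total_params: float, activated_params: float) -> float:
    """Share of a mixture-of-experts model's parameters used for each token."""
    if total_params <= 0:
        raise ValueError("total_params must be positive")
    return activated_params / total_params


# Figures quoted in the text above.
TOTAL_PARAMS = 671e9        # 671B total parameters
ACTIVATED_PARAMS = 37e9     # 37B parameters activated per token
TRAINING_TOKENS = 14.8e12   # 14.8T training tokens

fraction = activated_fraction(TOTAL_PARAMS, ACTIVATED_PARAMS)
tokens_per_param = TRAINING_TOKENS / TOTAL_PARAMS

print(f"Activated share per token: {fraction:.1%}")
print(f"Training tokens per total parameter: {tokens_per_param:.1f}")
```

On these numbers, roughly one parameter in eighteen is active for any given token, which is the practical point of a mixture-of-experts design: per-token compute tracks the activated count, not the total.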

Combined with the framework of speculative decoding (Leviathan et al., 2023; Xia et al., 2023), the additional token prediction can significantly accelerate the decoding speed of the model. In addition, we add a per-token KL penalty from the SFT model at each token to mitigate overoptimization of the reward model. Based on our evaluation, the acceptance rate of the second token prediction ranges between 85% and 90% across various generation topics, demonstrating consistent reliability.
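The two quantitative statements in the paragraph above can be illustrated with a short sketch. The first function assumes a simplified decoding model in which every step emits one token and, with probability equal to the acceptance rate, one additional predicted token. The second shows one common way to apply a per-token KL penalty against the SFT model, using the log-probability ratio of the sampled token as the KL estimate and adding the reward-model score on the final token. The function names, the `beta` value, and the placement of the score on the last token are assumptions for illustration, not details confirmed by the source.

```python
from typing import List


def expected_tokens_per_step(acceptance_rate: float) -> float:
    """Expected tokens emitted per decoding step with one extra predicted token.

    Assumes one token is always emitted and the extra token is kept with
    probability `acceptance_rate` (a simplification, ignoring any overhead).
    """
    if not 0.0 <= acceptance_rate <= 1.0:
        raise ValueError("acceptance_rate must be in [0, 1]")
    return 1.0 + acceptance_rate


def kl_penalized_rewards(
    policy_logprobs: List[float],
    sft_logprobs: List[float],
    reward_score: float,
    beta: float = 0.1,  # hypothetical penalty weight
) -> List[float]:
    """Per-token rewards: a KL penalty at every token, plus the score at the end."""
    if not policy_logprobs or len(policy_logprobs) != len(sft_logprobs):
        raise ValueError("log-prob lists must be non-empty and of equal length")
    # The log-ratio of the sampled token estimates the per-token KL term.
    rewards = [-beta * (p - s) for p, s in zip(policy_logprobs, sft_logprobs)]
    # The reward-model score is credited to the final token (an assumption).
    rewards[-1] += reward_score
    return rewards


for rate in (0.85, 0.90):
    print(f"acceptance {rate:.0%}: {expected_tokens_per_step(rate):.2f} tokens/step")

print(kl_penalized_rewards([-1.0, -2.0], [-1.5, -2.0], reward_score=1.0))
```

Under this simplified model, an acceptance rate of 85% to 90% corresponds to between 1.85 and 1.90 tokens per step, which is an upper bound on the speedup because it ignores the cost of verification. In the penalty sketch, tokens where the policy assigns higher probability than the SFT model receive a negative reward, which discourages the policy from drifting far from the SFT model in pursuit of reward-model score.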

A natural question arises concerning the acceptance rate of the additionally predicted token. We believe that this paradigm, which combines supplementary information with LLMs as a feedback source, is of paramount importance. Etc., etc. There could literally be no advantage to being early and every advantage to waiting for LLM projects to play out. Before we venture into our evaluation of efficient coding LLMs. C-Eval: A multi-level multi-discipline Chinese evaluation suite for foundation models. AGIEval: A human-centric benchmark for evaluating foundation models. DeepSeek-AI (2024a) DeepSeek-AI. DeepSeek-Coder-V2: Breaking the barrier of closed-source models in code intelligence. What is artificial intelligence? "Along one axis of its emergence, virtual materialism names an ultra-hard antiformalist AI program, engaging with biological intelligence as subprograms of an abstract post-carbon machinic matrix, whilst exceeding any deliberated research project."
